My question for Myke & Jason got answered on today's Upgrade!

I'm working on my Maverick upgrade that I wrote about last night, and also moving up to using Swift 5.1.1 in Docker. The infrastructure upgrades for Docker/Swift/Vapor are proving the most difficult part of the project.

Rethinking Metadata

Last year when I started thinking about moving away from Ghost as my blogging platform, the first place I turned was Jekyll. It's really popular and can create great websites. Plus it's nerdy, which is usually right up my alley. I found an exporter from Ghost to Jekyll which worked quite nicely. But one thing that did rub me the wrong way was that posts were separated from their associated media assets. This is why I started looking at textbundle packages.

I really like encapsulating each post in a single file, with assets and text contained inside. And, because I went from Ghost to the Jekyll format directly, I left the metadata at the top of each post's text.md file. The metadata (or front matter) contains things like the post title, date, tags, etc. As it is currently, there's a chunk of YAML at the top of each of my markdown files containing this metadata. I'm starting to rethink this approach now, for a couple of reasons:

- I want to build a textbundle editor that can work not just with files for my Maverick-powered blog, but anyone who wants to write using that format.

- Currently I have to open the main text of each post file to get at its metadata. While I haven't done any benchmarking on the server, I suspect this isn't the most efficient way of doing things. This unknown overhead has made me hesitant to implement some new features.

So, with the decision seemingly made to investigate moving metadata somewhere else, where should I start putting it? It turns out that textbundles have an info.json file inside. I'd always just hard-coded its contents when creating new bundles and moved on. But when I looked at the documentation for that file I found this gem:

Additionally, the meta data file can contain application-specific information. Application-specific information must be stored inside a nested dictionary. The dictionary is referenced by a key using the application bundle identifier (e.g. com.example.myapp). This dictionary should contain at least a version number to ensure backwards compatibility.
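The nested layout the spec describes can be read with Foundation alone. This is a minimal sketch, not Maverick's actual code: the `com.example.maverick` identifier, the `PostMetadata` type, and its `title`/`date`/`tags` fields are hypothetical, chosen to mirror the front matter mentioned above, plus the `version` the spec asks for.

```swift
import Foundation

// Hypothetical shape of the app-specific dictionary. The spec only requires
// a version number; the other fields mirror the existing front matter.
struct PostMetadata: Codable, Equatable {
    var version: Int
    var title: String
    var date: String
    var tags: [String]
}

enum InfoJSONError: Error {
    case notADictionary
}

/// Pulls the nested, app-specific dictionary out of a textbundle's info.json.
/// Returns nil when the bundle has no entry for `appID`.
func postMetadata(fromInfoJSON data: Data, appID: String) throws -> PostMetadata? {
    guard let info = try JSONSerialization.jsonObject(with: data) as? [String: Any] else {
        throw InfoJSONError.notADictionary
    }
    // The app dictionary sits under a key that is only known at runtime.
    guard let appDict = info[appID] as? [String: Any] else { return nil }
    // Re-serialize just the nested dictionary so Codable can do typed decoding.
    let nested = try JSONSerialization.data(withJSONObject: appDict)
    return try JSONDecoder().decode(PostMetadata.self, from: nested)
}
```

With metadata stored this way, listing posts would only need to read each bundle's small info.json rather than opening every text.md.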

Turns out the home for my custom metadata has been right in front of my face all along. I'll need to write a script to update all of my existing posts (which I don't anticipate will be too difficult), and from there update my Maverick code to handle the new location of the metadata. I sure hope this will only take a few days to get done.
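For that migration script, the first step is peeling the YAML front matter off the top of each text.md. A rough sketch, assuming `---` delimiters on their own lines, `\n` line endings, and only flat, unquoted `key: value` pairs; real YAML (lists, quoted or multi-line values) would need a proper parser such as Yams. `splitFrontMatter` is a hypothetical helper name.

```swift
import Foundation

/// Splits a Jekyll-style post into flat front matter fields and the markdown body.
/// Returns empty fields and the original text when no front matter is found.
func splitFrontMatter(_ text: String) -> (fields: [String: String], body: String) {
    let lines = text.components(separatedBy: "\n")
    // Front matter must open on the very first line and have a closing `---`.
    guard lines.first == "---",
          let end = lines.dropFirst().firstIndex(of: "---") else {
        return ([:], text)
    }
    var fields: [String: String] = [:]
    for line in lines[1..<end] {
        // Split on the first colon only, so titles like "a: b" survive intact.
        guard let colon = line.firstIndex(of: ":") else { continue }
        let key = String(line[..<colon]).trimmingCharacters(in: .whitespaces)
        let value = String(line[line.index(after: colon)...]).trimmingCharacters(in: .whitespaces)
        fields[key] = value
    }
    // Everything after the closing delimiter is the post's markdown body.
    let body = lines[(end + 1)...].joined(separator: "\n")
    return (fields, body)
}
```

The resulting `fields` could then be nested under the app's identifier in info.json, and `body` written back as the new text.md.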

It's been a bit since I did much significant work on Maverick and I'm really excited to dig back in.

Radio Silence

I never intend for droughts in making new posts. Oftentimes I'll make a post and not even realize how much time goes by before the next one. Lately finding things that I consider worthwhile to post has been difficult. Work has been crazy, I took on a side project for a client, and we got our kids in the swing of school for the first time (Atticus is in full-day kindergarten and Finnian goes to preschool 2 mornings per week).

I usually like writing about things that I hope will help people in some way, or at the very least will help me get to a destination I'm seeking (hence the SwiftUI posts most recently). But the problems I've had to solve at work and for the side project are specific to those codebases, and either probably not interesting to others or not things I should be sharing. So I go silent.

I don't like being silent. I can easily find myself in funks where I just feel down for no real reason. When that happens I can be short with my kids, quick to voice displeasure or other negative thoughts at work, and get into a pattern of feeling stuck. Like I'm not able to think effectively. I like writing. I like sharing tips with fellow devs. Sometimes I even like sharing bits about more personal things. And when I'm not doing those things, that can easily lead to a pattern of inactivity that I don't like.

So today, on November 1, I saw several people talk about their plans to blog daily during the month. I won't make that commitment, since that's usually a recipe for me to not follow through. But I do plan on writing more. About what, I'm not sure.

At the very least, I have some ideas of how to make my blogging engine better. And when I start doing that, it's good source material. I'd also love to finish my Scorebook update for iOS 13. And who knows what else. So, let's make this a good November.

And if you have any ideas of topics you'd like to see me talk about, please drop me a line.

Examples of My SwiftUI Struggles

In my last post I talked about some of the struggles I'm having getting up to speed with SwiftUI. Let's dive into a couple of examples.

Magic Environments

To make my Settings form view, I found a super helpful blog post by Majid Jabrayilov where he goes over the basics of building a Form with SwiftUI and binding some controls to UserDefaults. He outlines a type called SettingsStore, which I also implemented. I diverged from the tutorial to try my hand at making a property wrapper type to handle fetching from and saving to UserDefaults. Wiring a switch up to its associated value is where I slowed down quite a bit. The post has this line of code in the SettingsView type:

```swift
@EnvironmentObject var settings: SettingsStore
```

What this says is that somewhere in the SwiftUI environment there will be a SettingsStore instance. What the post doesn't cover is how the thing gets there in the first place. It turns out that an ancestor of my SettingsView needs to inject the store into the environment when constructing the view. Here's what that looks like in Scorebook's case, where the SettingsView is visible as a tab item in the tab bar:

```swift
let hostingController = UIHostingController(rootView: SettingsListView().environmentObject(SettingsStore()))
```

So after putting my SettingsView inside of the UIHostingController, I also need to chain an environmentObject(_:) call to put the store in the environment. When I failed to do this at first I got a crash, and when I tried constructing the environment and passing it in via constructor injection I couldn't set the variable. It has to be done through the environment. For my taste, it's feeling a bit magic-y at the moment but I'm hopeful that's due to the fact that I don't know what I'm doing very well just yet.
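The property-wrapper detour mentioned above might look something like this. It's a minimal sketch, not the code from Majid's post: `UserDefault`, `SettingsSketch`, and the `showsWinnerBadge` key are hypothetical names. The store actually used with `@EnvironmentObject` would also need to be an `ObservableObject` class so SwiftUI can observe its changes; that plumbing is left out here.

```swift
import Foundation

/// A minimal UserDefaults-backed property wrapper (hypothetical sketch).
/// `storage` defaults to `.standard` but can be swapped, e.g. in tests.
@propertyWrapper
struct UserDefault<Value> {
    let key: String
    let defaultValue: Value
    var storage: UserDefaults = .standard

    var wrappedValue: Value {
        // Fall back to the default when nothing (or a different type) is stored.
        get { storage.object(forKey: key) as? Value ?? defaultValue }
        // set(_:forKey:) accepts property-list values such as Bool, Int, String.
        set { storage.set(newValue, forKey: key) }
    }
}

/// Stripped-down, hypothetical settings type: one switch bound to one key.
struct SettingsSketch {
    @UserDefault(key: "showsWinnerBadge", defaultValue: true)
    var showsWinnerBadge: Bool
}
```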

Conditional View Navigation

I've got a button on my settings screen to send me an email. In UIKit land I have an action on the button and check that the device in question can send the email, then it presents the mail compose screen. If it cannot, then I show an alert. Easy enough.

But in SwiftUI land it's not so easy (at least to my imperatively wired mind). Thankfully I found a helpful StackOverflow question to help me get started with sending the message. I honestly don't quite understand how the UIViewControllerRepresentable protocol works yet, but I think the code in that answer gives me a good starting point. One thing that is kind of breaking my Objective-C brain is the usage of _ when setting the initial value of a @Binding property. It's like setting the instance variable directly in days of old.
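The underscore is less magical in plain Swift. For any property wrapper, the compiler synthesizes a backing property named with a leading `_`, so a line like `_isShowing = isShowing` in an initializer assigns the whole `Binding<Bool>` rather than the Bool inside it. `MiniBinding` below is a hypothetical toy, only loosely modeled on SwiftUI's `Binding(get:set:)`, to show that mechanism without SwiftUI:

```swift
/// Toy wrapper that stores a getter/setter pair instead of a value.
@propertyWrapper
struct MiniBinding<Value> {
    let getter: () -> Value
    let setter: (Value) -> Void

    var wrappedValue: Value {
        get { getter() }
        // nonmutating, so writes go through the closure even from non-mutating code.
        nonmutating set { setter(newValue) }
    }
}

final class Counter {
    var count = 0
}

struct CounterView {
    @MiniBinding var count: Int

    init(count: MiniBinding<Int>) {
        // `_count` is the synthesized backing storage: this installs the wrapper
        // itself. Assigning to `count` would instead call `setter`.
        _count = count
    }

    func increment() {
        count += 1 // reads through getter, writes through setter
    }
}
```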

The trouble comes in triggering the mail view from my settings screen. I think I've gotten it working with a dual-boolean option (again, somehow managed via magic). Here's what I've come up with (and this is working-ish in the simulator):

```swift
import SwiftUI
import MessageUI

// MailView and MailResult are defined elsewhere, based on the StackOverflow answer above.
struct SettingsListView: View {

    // Mail
    @State var mailResult: MailResult? = nil
    @State var isShowingMailView = false
    @State var isShowingMailErrorAlert = false

    var body: some View {
        NavigationView {
            ZStack {
                Form {
                    Section(header: Text("Feedback".uppercased())) {
                        Button(action: {
                            // Show the composer if the device can send mail, otherwise an alert.
                            if MFMailComposeViewController.canSendMail() {
                                self.isShowingMailView.toggle()
                            } else {
                                self.isShowingMailErrorAlert.toggle()
                            }
                        }) {
                            Text("Send Email")
                        }
                    }
                }
                .navigationBarTitle(Text("Settings"))
                .alert(isPresented: $isShowingMailErrorAlert) {
                    Alert(title: Text("Unable to send mail"),
                          message: nil,
                          dismissButton: .default(Text("OK")))
                }

                // Slide the mail view up over the form while it's active.
                if isShowingMailView {
                    MailView(isShowing: $isShowingMailView, result: $mailResult)
                        .transition(.move(edge: .bottom))
                        .animation(.default)
                }
            }
        }
        .navigationViewStyle(StackNavigationViewStyle())
    }
}
```

I haven't tried this on a device yet as I haven't installed iOS 13 at this point on anything (those days are nearing an end) but the Alert at least shows in the simulator. What I find kind of gross about this is the fact that I have separate @State booleans for showing or presenting content. How do those get reset when I dismiss the things they're presenting? It sounds like SwiftUI handles that but I don't know how.

I also don't like taking what was one single method in my UIKit code and scattering its pieces in a declarative spew of state all around my view code. I'm hoping there's a nice, Combine-y way of doing this that can let me isolate the states in clearer fashion.
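This isn't Combine, but one possible way to isolate the state is to fold the two booleans into a single enum, so the screen can only ever be presenting one thing. A sketch under that assumption: `MailPresentation`, `MailState`, and `sendEmailTapped(canSendMail:)` are hypothetical names, and `canSendMail` is passed in (it would come from `MFMailComposeViewController.canSendMail()`) so the logic can run without MessageUI.

```swift
/// What the settings screen is currently presenting; replaces two booleans.
enum MailPresentation: Equatable {
    case hidden
    case composer
    case unavailableAlert
}

struct MailState {
    private(set) var presentation: MailPresentation = .hidden

    /// The whole decision from the old UIKit action, kept in one place.
    mutating func sendEmailTapped(canSendMail: Bool) {
        presentation = canSendMail ? .composer : .unavailableAlert
    }

    /// Boolean views for APIs that want a Bool binding. Writing `false`
    /// resets to `.hidden`, but only if that thing was actually showing.
    var isShowingComposer: Bool {
        get { presentation == .composer }
        set { if !newValue && presentation == .composer { presentation = .hidden } }
    }

    var isShowingAlert: Bool {
        get { presentation == .unavailableAlert }
        set { if !newValue && presentation == .unavailableAlert { presentation = .hidden } }
    }
}
```

In the view, this might be held as `@State var mail = MailState()` and handed to `.alert(isPresented: $mail.isShowingAlert)`. SwiftUI sets an alert's `isPresented` binding back to `false` when the alert is dismissed, which is what would reset the single state value here.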

Lots to learn still, which makes this exciting and frustrating all at the same time!

Struggling With SwiftUI

SwiftUI was the big thing coming out of WWDC in June. It was the toast of the town and I saw lots of comments about how developers "wanted to rewrite their entire app" using it. Heck, I was one of those voices too. I think it's clear that SwiftUI is the future of writing interfaces for Apple's platforms.

When it came time to start working on Scorebook's iOS 13 update I decided to take a pragmatic approach. After all, my app's users won't care if the UI is SwiftUI or UIKit. They won't care if it's written in Swift or Objective-C, built in code or Interface Builder. They want the app to work, and to work well. How it gets there is my concern as the developer. So instead of doing the fun SwiftUI update, I started in on things they will care about, beginning with Dark Mode.

There are some rough spots on the main screens but when I got to Settings, everything was wrong. "Perfect!", I thought. I wanted an easy way to get started with SwiftUI, and with SwiftUI I get dark mode support for free. I built my Settings screens as static UITableView subclasses and there's a bunch of logic that can be factored out and made simpler to handle switch taps as well as the actions like sending a support email or triggering the rating dialog.

Getting the bare form up and running was pretty easy all told. I'm not running Catalina yet so I don't get the live previews. I had to shim the SwiftUI view into my app's tab bar, which I accomplished with a UIViewController which embeds a UIHostingController that has my SettingsListView as its root view. I did this to preserve getting the tab item for the Settings tab from the UIKit object rather than trying to see if the SwiftUI view can provide it. Then came binding my switches to UserDefaults, and I started feeling out of my element.

When I started writing this post I ended up pouring out many more words than I expected, so I'm going to break this up into two parts. In the next part I'll go over some concrete places where I've had issues and the answers I arrived at (if I got there, that is; this is all very much a work in progress).

Xcoders talk: Don't Fear the Project

Last week I gave a talk at our Seattle Xcoders meetup group. The topic was Xcode projects and I discussed the things that make up a project (targets, schemes, etc) and dissected project files themselves a little bit. The meat of the talk was then going through using XcodeGen to generate Xcode projects programmatically so you can stop checking them in to source control. This is a talk I've wanted to give for over a year and I'm glad I finally got around to it.

The really cool thing that happened (which I was not expecting) is the level of questions & discussion after I wrapped up the talk. I've not seen as many questions come out of a talk as this one had. That can generally mean 1 of 2 things. Either 1) I could have done better at explaining things, or 2) the topic generated that much genuine interest in people. From what I heard it's more the latter than the former, though there are definitely areas that I could have expanded on.

Many of the questions were about using flags for individual files in generated Xcode projects (think of toggling ARC on a per-file basis back in the day). I didn't know if that was possible, since I mainly use the file system to organize my sources and tell XcodeGen to grab files from the correctly organized folders. Turns out it is possible to do fancy things like define custom regexes to include certain files and then apply build phases or compiler flags to those matched sources (documentation). XcodeGen is even more powerful than I thought originally!

Were I to give this talk again at a conference or something I'd probably expand it to include a demo where I take an existing Xcode project and adapt it to use XcodeGen, but for this talk I wanted to avoid live coding. The main reason I delayed giving the talk at all was that I wanted to build a conversion tool. But in the end, when I tried it out, it was harder than I expected it to be, and I didn't think that building a complicated tool for someone to run once and move on from was a great use of my limited time.

We recorded video for the talk and I'll post a link when it's ready. For now, the slides can be found here.

🔗 Where is Windmill on the iPhone?

Markos Charatzas, writing about the state of his Windmill apps:

More importantly. Apple took the stance that the Command Line Tools Package is only meant to be used by developers in-house and not by 3rd parties to provide support for continuous integration systems - continuous delivery in the case of Windmill.

As developers building things for Apple's platforms we are largely operating within the walls set forth by Apple itself. There could be a whole industry of products built to support making development easier (think of things like Fastlane but easier to use).

Windmill is one such tool that looks really interesting. It currently runs on the Mac and monitors your local git repository for changes, building and running your app to make sure things don't break while you're developing. I've yet to use it personally, but more importantly, I think it's something that should be allowed to exist. Apple should be in the business of fostering creative solutions to lots of different problems instead of shutting them out.

If Apple's stance is that it's okay to build workflows and internal apps on these command line tools, then why is it not okay to try to make a product out of them? It seems to me that forcing every team to deal with these issues individually will waste a lot of time and add tons of friction to workflows that could be eased with these tools.

This is one case where I wish Apple would be more conversational and clear. Markos has commendably been trying to get a conversation started. But oftentimes these "discussions" are one sided, with interested third-party developers being the ones talking and patiently waiting for Apple to respond. I'd love to see that change someday but the change will have to be on Apple's side, not ours.

Reverting SwiftPM for Tools

For the last few months I've been using Swift Package Manager to manage my tooling dependencies for iOS projects. It's a technique that I learned about from Orta Therox and I adopted it in Scorebook and even made an app template that used it too. The advantages were great, namely that I didn't have to manage any version scripts or vendor tools directories. It just worked.

But over the last couple of weeks things started to fall apart a little bit. I downloaded Xcode 11 after releasing my new Scorebook update so that I could start digging into all the cool stuff iOS 13 had to offer. I also checked my tools to see if they had new versions available (I'm using 2 different tools at the moment: XcodeGen and swift-sh). I went to update them and that's when I got my first error: dependency graph is unresolvable.

I did some digging and what seems to have happened is that my tools have conflicting dependencies. Bummer!
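A toy model of why this happens: SwiftPM picks exactly one version of each package for the entire dependency graph, so if two tools depend on the same package with non-overlapping version ranges, nothing satisfies both. The post doesn't name the conflicting package, so the versions in this sketch are hypothetical, and the model ignores pre-release tags, branch requirements, and transitive constraints.

```swift
/// Simplified semantic version (no pre-release or build metadata).
struct Version: Comparable, CustomStringConvertible {
    let major: Int, minor: Int, patch: Int

    static func < (lhs: Version, rhs: Version) -> Bool {
        (lhs.major, lhs.minor, lhs.patch) < (rhs.major, rhs.minor, rhs.patch)
    }

    var description: String { "\(major).\(minor).\(patch)" }

    /// What SwiftPM's `from: "x.y.z"` requirement means: up to the next major version.
    func upToNextMajor() -> Range<Version> {
        self..<Version(major: major + 1, minor: 0, patch: 0)
    }
}

/// Intersects every tool's requirement on one shared package.
/// Returns nil when no single version can satisfy all of them.
func resolve(_ requirements: [Range<Version>]) -> Range<Version>? {
    guard let first = requirements.first else { return nil }
    var lower = first.lowerBound
    var upper = first.upperBound
    for requirement in requirements.dropFirst() {
        lower = max(lower, requirement.lowerBound)
        upper = min(upper, requirement.upperBound)
    }
    return lower < upper ? lower..<upper : nil
}
```

For example, one tool needing a shared package `from: "2.1.0"` and another needing it `from: "3.0.0"` gives `2.1.0..<3.0.0` and `3.0.0..<4.0.0`, which don't overlap, so `resolve` returns nil.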

I had some options at this point: 1) figure out what the conflicts were and solve them by forking and opening pull requests on one or both of XcodeGen and swift-sh. 2) Create separate tools modules for each tool. 3) Roll back using SwiftPM for my tooling needs and build scripts to maintain each tool.

#1 would have taken a long time to resolve and didn't seem worth my while. #2 would have been slightly more tolerable, but maintaining that directory structure didn't seem worth it either, and would likely have required scripts for each tool anyway. #3 felt like the right choice in the end, and that's what I went with.

The upshot of going the way that I did is that I can always ensure that I'm using a binary version of each tool, and if I come across one that's not written in Swift I can add it to my project with the same amount of effort as a Swift-based one. Using SwiftPM for tooling like this was always kind of a hack. SwiftPM was meant to manage your project's dependencies and to make it easy to bring libraries into your codebase.

I'd love for it to work again someday, but for now I'm mostly glad to have gotten over this hurdle so I can move on to more interesting problems like adding Dark Mode to Scorebook.

I pushed the change I made to my iOS template, and if there's a better way to write the bash scripts I'm all ears (I'm sure there is, since I'm a bash noob still). Check out the updated code here.

Scorebook 2.0 Preview

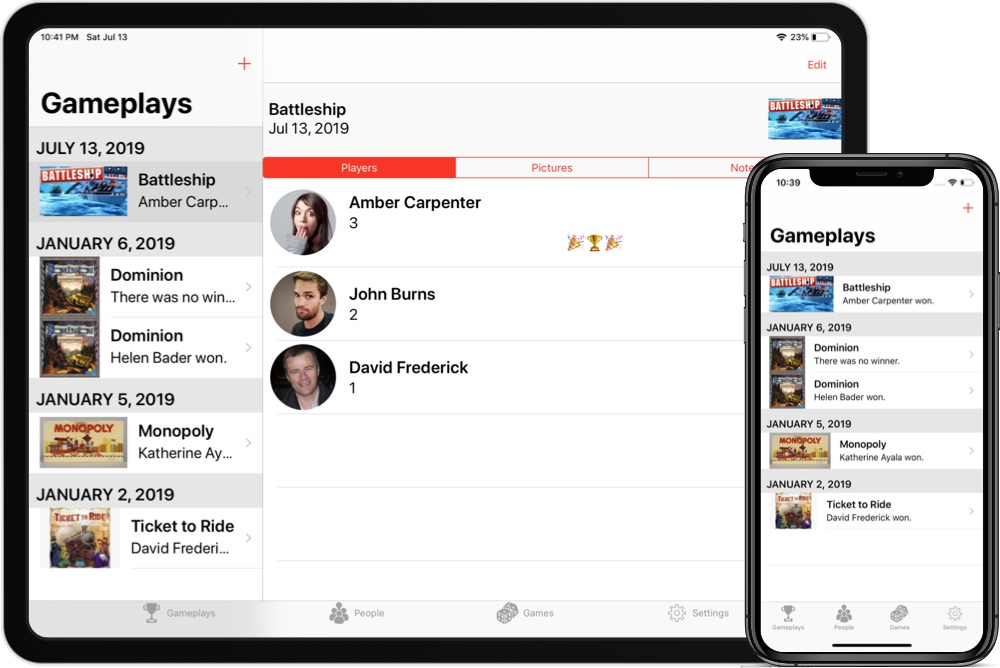

I've been hard at work over the last few months on a big update to Scorebook. It's going to have a whole new interface, work on the iPad, and support the Dynamic Type accessibility feature.

The first thing that is different is the main UI. There's now a tab bar which gives easy access to your gameplays, the games you've played, and the people you've played them with. It's a really nice way to visualize the games you've been playing.

The main driver of the UI changes was putting Scorebook on the iPad. On the bigger screen you'll get a split view, with a list of things on the left, and a detail view on the right. And with iCloud sync (which is now enabled by default) you'll be able to see your stuff on any device. It's really great.

From Scorebook's initial 1.0 launch until now, I'd been using the Avenir font across the app. I really do like how it looks, but the time has come to move to the iOS system font, San Francisco. The main reason is that I wanted to support users who like their text bigger (or smaller) than the default. Accessibility is a big deal. Without it, an app is harder to use or even unusable by people who rely on those features.

With all of the work that's gone into the app, and now with being on a whole new device, I've also decided to raise the price to $4.99 USD. I'm not sure what the higher price will do for sales but I'm hopeful that people will see value in the additional work I've put into Scorebook.

While the release date isn't set yet, I'm in the final phase of the project – screenshots. Apple has so many different devices nowadays that this is actually a really complicated part of making an app (especially as a solo developer). But I'm pleased to say that I expect to wrap the screenshots tonight and submit the app for release later this week.

Scorebook 2.0 will be a free update to all users. If you want to get in at the existing $2.99 price you can buy it on the App Store today.